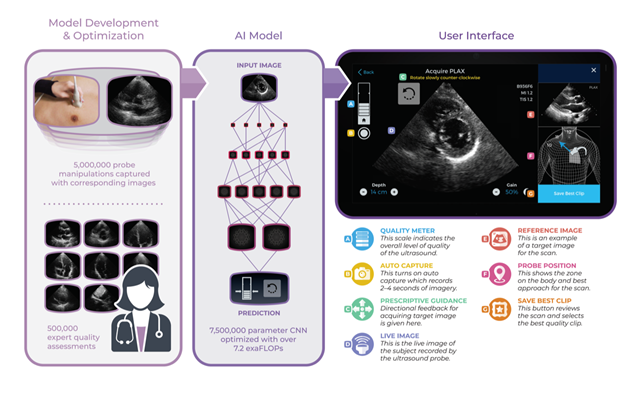

Last year, artificial intelligence (AI)-focused Caption Health Inc. won the U.S. FDA’s nod for software that guides untrained clinicians step by step through a cardiac ultrasound exam, a procedure normally performed by a highly skilled specialist. Now, the Brisbane, Calif.-based company has published data showing that nurses without prior ultrasound experience who used the Caption Guidance software captured images of diagnostic quality for assessing known cardiac conditions.

The FDA cleared Caption Guidance in February 2020, via the de novo pathway, enabling a broad range of health care workers to perform cardiac ultrasound examinations at the point of care. The Caption AI platform, which includes Caption Guidance and Caption Interpretation, is the only AI-guided medical imaging acquisition software to have FDA authorization.

Clinical results

The study, which formed the basis for the FDA’s 2020 decision, included 240 patients aged 20 to 91. Of those, 42% were female, 17.6% were Black or African American and 33% had a body mass index (BMI) of 30 or greater. Each patient underwent paired ultrasounds, one performed by a nurse and one by a registered diagnostic cardiac sonographer. Cardiologists reviewed the images for diagnostic quality and also made diagnostic assessments. The primary endpoints were qualitative judgments of left ventricular size and function, right ventricular size and the presence of pericardial effusion.

For the diagnostic assessments of the primary endpoints, nurse and sonographer scans agreed in at least 92.5% of cases. As for image quality, experienced cardiologists judged the images that nurses with no prior ultrasound training or experience captured using Caption Guidance to be good enough to assess left ventricular size and function in 98.8% of patients, right ventricular size and function in 92.5% of patients and the presence of pericardial effusion in 98.8% of patients.

The results also supported Caption Guidance’s utility in real-world clinical practice. Full echocardiograms that had been scheduled for the patients and performed within two weeks of the study found cardiac abnormalities in more than 90% of them, so the software was tested largely on patients with actual heart disease rather than on healthy volunteers. The results were consistent across sex, race and BMI.

The study was published Feb. 18, 2021, in JAMA Cardiology.

“In our mission to democratize access to health care and quality medical imaging, we wanted to ensure that we tested Caption Guidance on a wide range of patients to prove its effectiveness across a diverse population,” said Yngvil Thomas, Caption Health’s chief of medical affairs and clinical development. “This study shows that AI-guided imaging can expand health care professionals’ skill set in a meaningful way with minimal training – giving patients more opportunities to receive timely diagnostic care.”

Real-world impact

The goal with Caption AI is not to replace skilled sonographers but to expand the number of health care professionals who can perform focused cardiac ultrasound at the point of care.

“The study’s remarkable agreement between nurses’ scans and sonographers’ scans shows that the use of AI like Caption Guidance could fundamentally change how we use medical imaging,” said Akhil Narang, a cardiologist at Northwestern Medicine and lead author on the paper. “This will extend the abilities of health care providers to evaluate for different pathologies in critical care, emergency departments and other settings – and perhaps identify them even earlier with the assistance of AI.”

The AI-based technology could also help reduce sonographers’ exposure to COVID-19, which can cause lasting damage to the heart. Some hospitals are already using it to assess and treat cardiac complications in COVID-19 patients, and it is quickly becoming the standard of care in critical care settings, the company said.

As for getting health care professionals up to speed, Charles Cadieu, CEO and co-founder of Caption Health, said it takes just days to learn how to use Caption Guidance. “For this study, nurses completed one hour of didactic … training followed by nine practice scans before scanning in the study,” he told BioWorld. Total training time was under 12 hours, or less than two business days, for every nurse in the study. By contrast, the American Society of Echocardiography guidelines for sonographer education call for more than a year of training and clinical internship.

While that more intensive training produces sonographers who can perform far more comprehensive studies than Caption AI supports, “there is a shortage of professionals with this level of training. That shortage, in turn, limits the ability to acquire imaging at the point of care,” Cadieu said.

Commercial push

Last summer, the company closed a $53 million series B funding round to further develop and commercialize its AI-guided ultrasound technology. “We’re continuing to make progress scaling up commercial efforts and building out the organization,” Cadieu said.

The company announced its first commercial partner, Northwestern Medicine, in October and is currently implementing Caption technology at a number of major hospitals and health systems across the U.S.

The Caption Guidance software can currently be used with the Terason uSmart 3200T Plus full-service ultrasound system from Teratech Corp., but the company plans to expand the range of compatible hardware in the near future.

It is also looking to expand its pipeline with new applications. In November, the company received a $4.95 million grant from the Bill & Melinda Gates Foundation to develop AI technology for lung ultrasound, with the aim of improving the diagnosis of pneumonia in young children in resource-limited settings, as well as in COVID-19 patients.

The company also has a new version of Caption Interpretation, which helps clinicians obtain quick, easy and accurate measurements of cardiac ejection fraction (EF) at the point of care. The updated version, which won FDA clearance last year, automatically calculates EF from three ultrasound views: apical 4-chamber, apical 2-chamber and the readily obtained parasternal long-axis view.
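For readers less familiar with the metric, EF is conventionally defined as the fraction of the blood in the left ventricle at the end of filling (end-diastolic volume, EDV) that is ejected with each beat, where ESV is the end-systolic volume. This is the standard textbook definition only; the article does not describe how Caption Interpretation estimates the volumes from its three views, and the numbers in the example below are illustrative, not study data.

```math
\mathrm{EF} = \frac{\mathrm{EDV} - \mathrm{ESV}}{\mathrm{EDV}} \times 100\%
```

For example, with illustrative volumes of EDV = 120 mL and ESV = 50 mL, EF = (120 − 50)/120 × 100% ≈ 58%.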